A Simple Production-Ready AWS Cloud Platform

How to get your business started on AWS

This post is part of The Great Platform Engineering Series.

Be sure to check out my take on the Commitments of a Great Cloud Platform.

Earlier this year, I was contracted to work on a greenfield project — the dream.

They wanted me to design and build a cloud platform to host their microservices.

They had been struggling with the reliability of their legacy platform.

Like many organizations, they had limited headcount, so the solution had to keep engineering overhead low.

To minimize engineering overhead, I will:

Put automation at the center of everything.

Prioritize managed services: less maintenance and complexity.

Prioritize technology known by the team: less of a learning curve, better ownership of the new platform.

Define everything as code: less clickOps.

To put reliability and availability at the core of our design, I will:

Build a strong monitoring and logging stack: You can’t improve what you don’t measure.

Deploy our infrastructure in a high-availability manner.

Build a system flexible enough to adapt to the workload.

In this post, I aim to build a High-Level Design (HLD) for this solution.

For clarity, there are a few parts of the solution that I won’t cover here, such as the environment structure (Dev, Staging, Prod), the database logic, DNS and certificate management, cost, and the pipelines. If there is interest, I’m happy to go into more detail.

The Container Orchestrator

Kubernetes is not the right fit here — let’s not argue about it 🤓.

Once the client confirmed AWS was their preferred cloud provider, I immediately thought of Amazon Elastic Container Service (ECS) as my main container orchestrator.

ECS is a great hosting solution for straightforward requirements, moderate scaling needs, and when some vendor lock-in is acceptable. In other words, I would not recommend ECS for large deployments of interconnected services or when multi-cloud is a requirement, but it’s perfect for our use case.

Another reason I love ECS is the flexibility it allows with the underlying infrastructure. It works with both standard EC2 instances and Fargate (AWS’s serverless compute engine for containers, where you don’t manage the hosts), and provides strong auto-scaling mechanisms.

As we want to keep the engineering overhead low, I chose ECS Fargate.

Bonus point: in my experience, ECS Fargate often works out cheaper than comparable managed container offerings, although this depends on the workload.

A Standard Network Design, Across Zones

Usually, network design isn’t included in the HLD, but I thought it’d be a good way to ease into the architecture.

Subnetting Is Never Fun

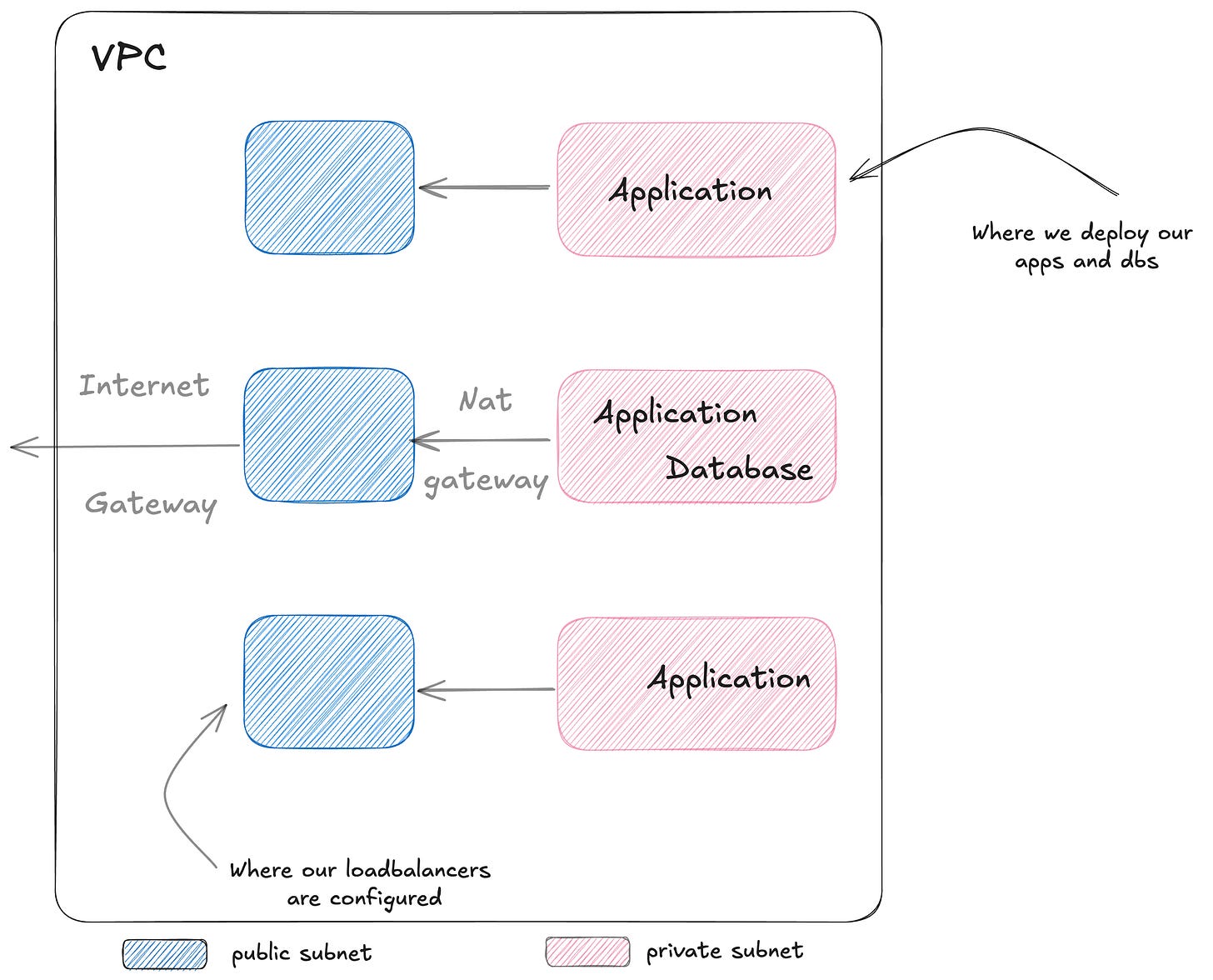

Nothing particularly opinionated here; we’ll stick to best practice.

We’ll have one public subnet and one private subnet per availability zone (AZ).

To further segregate our databases, we could also isolate them into their own extra private subnets.
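To make the layout concrete, here is a minimal sketch of how the address space could be carved up. The VPC range (10.0.0.0/16), the /20 subnet size, and the helper name `plan_subnets` are my assumptions for illustration, not the values used on the project.

```python
import ipaddress


def plan_subnets(vpc_cidr, azs, new_prefix=20):
    """Assign one public and one private subnet to each availability zone.

    Subnets are carved sequentially out of the VPC range, so they never overlap.
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # generator of equal-sized blocks
    plan = {}
    for az in azs:
        plan[az] = {
            "public": str(next(blocks)),   # hosts the load balancer
            "private": str(next(blocks)),  # hosts the application tasks
        }
    return plan


# Hypothetical VPC range; the three AZ names are those of eu-west-1.
plan = plan_subnets("10.0.0.0/16", ["eu-west-1a", "eu-west-1b", "eu-west-1c"])
for az, subnets in plan.items():
    print(az, subnets)
```

A /16 split into /20 blocks leaves ten blocks unused after the six above, which is where the extra database subnets could go.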

This diagram is oversimplified and ignores Security Groups and network ACLs (NACLs).

The applications are running in private subnets, and are isolated from the internet.

Requests from outside always come through a load balancer, which is running in the public subnet.

One Region, Multi-AZ

Whilst the client mentioned having customers in both Europe and North America, I advised keeping things contained to one region for now.

It lowers complexity, gives us more time to build a reliable system, and we could still use some cloud magic (a CDN, for instance) to lower latency, if necessary.

On Amazon Web Services, a region is made up of multiple availability zones (typically at least three), each consisting of one or more physically separate data centers.

Our solution will be deployed in the Ireland region (eu-west-1), and will span the three availability zones. This ensures redundancy and allows higher availability.

The Monitoring Stack

Prometheus > CloudWatch

CloudWatch is AWS’ monitoring and logging system. It has native integrations with most AWS services and can provide us with some valuable metrics. However, it’s not quite enough; we may want to monitor our applications and resources across various systems.

The industry standard is Prometheus, which scrapes metrics from any instrumented application or exporter that exposes them over HTTP. Here, the client’s app is already exposing metrics on its /metrics endpoint.
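For readers who haven’t seen one, here is a rough sketch of what a /metrics endpoint returns, using only the Python standard library. The metric names, values, and the `render_metrics` helper are hypothetical; a real application would more likely use an official Prometheus client library than hand-roll the format.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical in-memory counters: name -> (help text, current value).
COUNTERS = {
    "app_requests_total": ("Total HTTP requests handled.", 3),
    "app_errors_total": ("Total requests that ended in an error.", 1),
}


def render_metrics(counters):
    """Render counters in the Prometheus text exposition format."""
    lines = []
    for name, (help_text, value) in sorted(counters.items()):
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} counter")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves the rendered counters on /metrics and 404s everything else."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(COUNTERS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, pass `MetricsHandler` to `http.server.HTTPServer` on a port of your choice and point a browser at /metrics.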

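We face our first challenge: Prometheus is a poor fit for Fargate because it needs persistent local storage. Fargate offers only ephemeral task storage or EFS volumes, and Prometheus does not support NFS-style filesystems such as EFS for its local storage. No problem: ECS allows us to mix EC2 and Fargate, so we deploy Prometheus on EC2 and configure it to scrape specific targets via a prometheus.yml config file.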

A standard approach is to use “exporters”, which connect to AWS and expose AWS metrics for Prometheus to collect. I opted for both the ECS Exporter, for deep ECS-specific metrics, and the CloudWatch exporter for any other metrics coming from CloudWatch (e.g., the load balancer).

Service Discovery for a Dynamic Configuration

Unlike Prometheus running on Kubernetes, which can discover its targets through the Kubernetes API, ours needs to be explicitly told where to gather metrics from. As we don’t want to build an intricate, hard-to-maintain solution based on environment variables or file sharing to pass the prometheus.yml to the running container, we bake our config into the Docker image.

Because we’re experienced engineers, we don’t want to hard-code IP addresses into our configuration file. Instead, we set up a Service Discovery Namespace and a Private Hosted Zone, then configure our ECS Services to automatically register their tasks’ IP addresses against this private domain (e.g., app.local).

That way, the exporters can simply be referred to by their own domain names (e.g., cloudwatch-exporter.app.local).
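As an illustration, here is a small sketch that renders a prometheus.yml using DNS-based discovery against the private domain. The `render_prometheus_config` helper, the `ecs-exporter` service name, the ports, and the scrape interval are assumptions on my part; `dns_sd_configs` is one way to resolve the registered names, and a plain static target pointing at the same hostname would work as well.

```python
def render_prometheus_config(namespace, jobs, scrape_interval="30s"):
    """Render a prometheus.yml with one DNS-discovered scrape job per service.

    `jobs` maps a service name registered in the namespace to its metrics port.
    """
    lines = [
        "global:",
        f"  scrape_interval: {scrape_interval}",
        "scrape_configs:",
    ]
    for service, port in jobs.items():
        lines += [
            f"  - job_name: {service}",
            "    dns_sd_configs:",
            "      - names:",
            f"          - {service}.{namespace}",
            "        type: A",  # resolve every task IP registered under the name
            f"        port: {port}",
        ]
    return "\n".join(lines) + "\n"


# Service names and ports below are illustrative assumptions.
config = render_prometheus_config(
    "app.local",
    {"cloudwatch-exporter": 9106, "ecs-exporter": 9779},
)
print(config)
```

The rendered file can then be copied into the Prometheus image at build time, so a new target only requires a config change and a rebuild.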

Visualizing The Metrics

Prometheus is great, but it really becomes value-adding when you pair it with Grafana, the visualization and dashboard tool.

Our solution includes a Grafana deployment on ECS, pre-configured to read metrics from Prometheus. Because the whole point of the platform is to support the internal team, I also included basic out-of-the-box Grafana dashboards.

The Logging Stack

OpenSearch, an AWS-Flavored Elasticsearch

For the logging stack, we have more options. As we’re already on AWS, we could rely entirely on CloudWatch Logs, since everything is already natively integrated.

I chose to use OpenSearch (AWS’s fork of Elasticsearch), as it is a widely adopted logging backend, offers richer indexing and search than CloudWatch Logs, and can handle log transformation.

Just like ECS, OpenSearch comes in two flavors: managed clusters running on provisioned nodes, and serverless. The cost is about the same for non-intensive usage, but OpenSearch Serverless is significantly simpler. It introduces the concept of a collection, which is a ready-to-use endpoint for receiving logs (along with a dashboard endpoint).

Keeping our low-overhead commitment in mind, I chose to use OpenSearch Serverless.

FireLens: The Log Forwarder

OpenSearch receives and indexes logs, but it needs a log forwarder to send them. Common log forwarders include Logstash, Fluentd, and Fluent Bit. From ECS to OpenSearch, the recommended route is FireLens, the ECS log router, paired with AWS’s Fluent Bit image (AWS for Fluent Bit). FireLens runs as a sidecar in your ECS task.
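To show what the sidecar setup looks like, here is a sketch of the two container definitions an ECS task would need, built as plain Python dictionaries. The container names, application image, collection host, and index are placeholders I made up, and the Fluent Bit output options should be checked against the Fluent Bit OpenSearch plugin documentation before use.

```python
import json


def firelens_container_definitions(app_name, app_image, opensearch_host, region, index):
    """Build ECS container definitions: a Fluent Bit log router plus the app.

    The app container uses the awsfirelens log driver, so its stdout/stderr
    is handed to the log router sidecar, which forwards it to OpenSearch.
    """
    log_router = {
        "name": "log-router",
        "image": "public.ecr.aws/aws-observability/aws-for-fluent-bit:stable",
        "essential": True,
        "firelensConfiguration": {"type": "fluentbit"},
    }
    app = {
        "name": app_name,
        "image": app_image,
        "essential": True,
        "logConfiguration": {
            "logDriver": "awsfirelens",
            # Options are passed to the Fluent Bit output plugin (assumed values).
            "options": {
                "Name": "opensearch",
                "Host": opensearch_host,
                "Port": "443",
                "Index": index,
                "AWS_Auth": "On",
                "AWS_Region": region,
                "AWS_Service_Name": "aoss",  # signs requests for OpenSearch Serverless
                "tls": "On",
            },
        },
    }
    return [log_router, app]


# All argument values are hypothetical placeholders.
definitions = firelens_container_definitions(
    app_name="orders-service",
    app_image="example/orders-service:latest",
    opensearch_host="example-collection.eu-west-1.aoss.amazonaws.com",
    region="eu-west-1",
    index="orders-service-logs",
)
print(json.dumps(definitions, indent=2))
```

The resulting JSON is the shape expected in the containerDefinitions field of a task definition, whether it is passed through Terraform or another tool. The task role also needs permission to write to the collection.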

Another way is to have logs transit from ECS to CloudWatch Logs, and then have a Lambda function automatically forward the logs to OpenSearch. I rejected this option, but let me know in the comments if you want me to explore it.

Visualizing The Logs

OpenSearch Collections come with their own dashboard. The client team can access it with either SSO or simple IAM authentication and authorization. In my solution, each standalone service is pre-configured to stream logs to its own OpenSearch Collection.

Piecing Everything Together

Let’s recap.

We have defined a set of AWS resources (ECS Services and Tasks, EC2 instances, OpenSearch) and a set of deployed applications (the client’s microservices, Fluent Bit, Prometheus, the metrics exporters, and Grafana).

Everything is either infrastructure as code or configuration.

Without going into details, I used GitHub Actions to:

Test (when possible), build, and push the Docker images to a container registry (I like Amazon ECR).

Run the infrastructure code (Terraform) against the relevant environment.

We can now put together a (simplified) High-Level Design of our production platform.

It is highly available, as our applications are deployed across three AZs.

It is reliable, as ECS is configured for self-healing and auto-scaling.

Additionally, we have configured a strong Monitoring and Logging stack.

And all of it is automated through GitHub Actions.

The diagram includes a few components I haven’t covered in detail, such as RDS and ECR.

Whilst the client was satisfied with this architecture, I proceeded to fine-tune and harden this cloud platform over the following weeks. I used the first couple of application migrations from the legacy platform to this one to gather more feedback from the internal team, to further tailor the process and the documentation.

Let’s be honest with each other. Share your feedback. Raise any mistakes I made. Point me to great resources. Engage in conversations. Let’s be friends.